Display AWS billing data graphically

One way using InfluxDB and Grafana

AWS offers a variety of tools and dashboards to understand the billing data of its services, from tracking to visualization to alerting. Many use cases are covered with the native AWS tools. However, in reality, many organizations and operations teams have very unique use cases for monitoring and alerting that require billing data to be considered. Some might be:

- Not be in the comfortable position of having to focus on just one cloud provider and unify different billing data sources or formats

- want to use their existing monitoring and warning system, which is tailored to their needs, also for processing billing data

- want to train their own machine learning application to find possible optimizations in their own or their customers' billing data.

Fortunately, AWS offers the possibility to export the billing data in a simple CSV format. This allows us to develop a customized approach to processing AWS billing data. In this article, you will learn how to process AWS billing records, transform them if necessary and transfer them to influxDB, a popular time series database. You can then graph these values using Grafana.

Identify and understand the source fields of the cost reports

Before we can start processing CSV data, we need to understand the meaning of the fields. Note that AWS itself offers a legacy format called "Detailed billing report" (DBR) and the current format called "Cost and Usage Report" (CUR). The CUR itself can contain more than 120 fields in its CSV file. Having the documentation at hand is definitely helpful: cost reporting. If you are looking for a detailed business intelligence report, you will need a data warehousing application. In this case, these reports can be imported and queried from Amazon Redshift Invoicing with AWS Redshift. However, since we want to create an alerting tool and an operational dashboard, we will be truncating the fields significantly so as not to break our monitoring infrastructure. Interesting values to consider from the CUR are:

- lineItem/LineItemType: The CUR contains not only on-demand costs, but also taxes, refunds, advance fees and more. If you only need the on-demand costs and want to leave the taxes to company controlling, filter this field to the value "Use".

- lineItem/ProductCode: the product code indicates the AWS service used, e.g. "AmazonEC2".

- lineItem/UsageAccountId: the account that used the resource

- lineItem/UnblendedCost: the costs for the respective item. Depending on your use case, there are also the fields UnblendedRate and UnblendedUsage as well as BlendedCosts/Rates, which indicate average prices for organizations. If in doubt, you should consult the documentation.

- identity/TimeInterval: The reports do not specify a timestamp of when the billed event occurred, but the interval on an hourly basis. We use this interval and extract a timestamp that is sufficient for a dashboard. For tracking the lifetime of a resource, the lineItem/UsageStartDate and lineItem/UsageEndDate fields may be more appropriate.

Planning the data model

InfluxDB is our database of choice in this example to store the data and serve as the backend for our visualization and monitoring. Another popular choice for this task would be Prometheus. InfluxDB divides the data into two categories: Tags and Fields.

Tags are used to create an index and partition data records, they are mainly intended to contain metadata and structure the data. The "actual" data is contained in fields. A dataset with fields and tags also needs a measurement name to group a set of values and a timestamp for each data point, as InfluxDB is a time series database.

Choosing the right amount of data for a monitoring sample is difficult to judge. An optimistic data analyst may want to collect all the data and formulate the use cases later. An operations engineer, on the other hand, may want to run their monitoring infrastructure in "real time" as much as possible, and smaller data sets are definitely easier to handle and less wasteful.

Extract, transform, load

Since we have several different input sources and possibly several different monitoring applications, we need to transform the data. For an incoming CSV file, we need to define which CSV fields should be used as tags and which as fields. The basis will be a custom schema:

csv_format = {

'dbr-cost-allocation': {

'tags': [

'productcode',

'linkedaccountname'

],

'fields': [

'totalcost'

],

'time': 'invoicedate'

},

"cur_report": {

'tags': [

'lineitem/lineitemtype',

'lineitem/productcode',

'lineitem/usageaccountid'

],

'fields': [

'lineitem/unblendedcost'

],

'time': 'identity/timeinterval'

},

....

Each section defines its own InfluxDB measurement, which specifies which CSV values are to be used as a timestamp, field or tag. All other CSV values are discarded. The examples above already illustrate the differences between the old DBR format and the newer CUR format, although both are native AWS billing records. In order to standardize both and create consistent dashboards, we will use another table to rename fields as needed:

map_fields = {

'linkedaccountname': 'accountname',

'lineitem/lineitemtype': 'chargetype',

'lineitem/productcode': 'productcode',

'lineitem/unblendedcost': 'totalcost',

'lineitem/usageaccountid': 'accountname'

}

An attentive reader will stumble across a line in the above list: unblendedcost is renamed totalcost, although it was stated above that unblendedcosts are costs of very specific items, in any case not a total sum. This only makes sense with the next section:

reduce_values = {

....

"cur_report":

"fields": (add, 'totalcost')

…

(Where map and reduce are higher-order functions: Fold_(higher-order_function, not Google's MapReduce in particular).

The above fields are combined with every other entry that has the same primary key using the above function. In this case, all non-combined costs (which have already been renamed in the listing above!) are added together to calculate the total cost. InfluxDB considers a unique key to be the product of tags x measurement x timestamp. Another possible use case would be to calculate the most expensive service usage by using a 'max' function instead of an 'add' function.

Now, to combine all of the above, we can create an algorithm that converts each CSV file and creates a custom data model represented as pseudocode:

for csv in csv_list:

reduce(rename(filter(format, csv)))

# apply a filter/schema for each csv row, rename the fields and

# finally sum fields

So now we have a plan, but we still have to do it:

Retrieving reports from S3

A key feature of AWS and other cloud providers is ease of use and the ability to perform almost any action with well-documented (and implemented!) APIs. For everything AWS related, the one-stop shop for Python is Boto3: boto3.amazonaws.com

Downloading a csv file is as easy as:

s3client.download_file(Bucket=bucket, Key=file_key, Filename=local_filename)

... if we don't have to take different file names, storage locations and formats into account. But we can use the same schema for the file query as for the data model. First, we determine whether a file in the s3 bucket is a billing report by matching the file name with a pattern; then we scan all files and return the list found.

regex_pattern = {

'3rd-party-preprocessed': '^\d\d\d\d-\d\d-\d\d.zip$',

#will match f.e.: 2019-01-01.zip

'native_dbr': '^\d\d\d\d\d\d\d\d\d\d\d\d-aws-cost-allocation-.*.csv',

#will match <AWS-account-id>-aws-cost-allocation-2019-01-01.csv

'cur_report' = "{}/{}/{}/{}-Manifest.json".format(prefix,report_name,billing_period_regex,report_name)

# will match CUR report path using the regex:

# billing_period_regex = '\d\d\d\d\d\d\d\d-\d\d\d\d\d\d\d\d'

}

...

finished = False

response = s3client.list_objects_v2(Bucket=bucket)

while not finished:

for c in response['Contents']:

if re.match(regex_pattern[csv_formatting], c['Key']):

result.append(c['Key'])

#more items to process?

finished = not response['IsTruncated']

if not finished:

logging.info("Fetching next 1000 items")

continue_token = response['NextContinuationToken']

response = s3client.list_objects_v2(Bucket=bucket,ContinuationToken=continue_token)

return result

The same as for file name pattern matching is probably also needed for all the other details that a programmer and/or data engineer highly values: timestamps and time zones, file encoding, CSV styles (not always comma separated...) and more.

For example, a CSV file may be formatted with ; as separator, Unix line terminator and latin-1 encoding, while the AWS native file uses the Windows line terminator, separated fields with , and utf-8 encoding. The following list illustrates this problem:

if '3rd-party-csv_file' in filename:

csv_file = file_obj.readlines()

csv_decoded = [ x.decode('latin-1') for x in csv_file]

dialect = csv.unix_dialect

dialect.delimiter = ';'

csv_reader = csv.DictReader(csv_decoded, dialect=dialect)

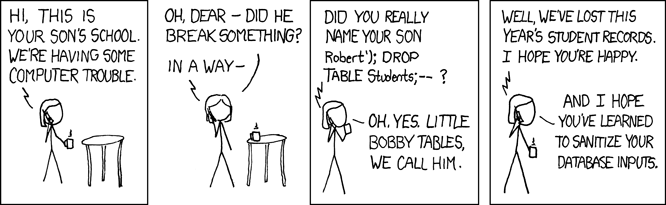

For someone who implements such an approach: Always remember Bobby Tables -

Graph AWS Billing Data

Once the data has been discovered, downloaded, extracted, formatted, renamed, added and transferred to influxDB, we can finally create fancy dashboards and sharp alerts. However, this is an art in itself and could be covered in detail in another blog article. However, since this article is titled Graphing AWS Billing Data, I'll show you a simple dashboard using the data model suggested above. The following query against influxDB in a Grafana panel:

You will get a nice graph that is already able to break down by either product, account or fee type. As you can see below:

From here you can create your own visualization for the KPI you need. The peaks you see in the charts are services that are billed on a daily basis, e.g. S3. If you only need the total cost per day, you can display this as an average per day to flatten out the peaks.